Date : 10/06/2023

Relevance : GS 3 : Science and Technology, GS 4 : Ethics

Key Words: AI bias, Artificial intelligence, Transparency and Accountability, Social good

Context-

- Artificial intelligence (AI) is a rapidly developing technology with the potential to revolutionize many aspects of our lives. However, there is a growing concern that AI could be used to perpetuate existing biases and discrimination. This is a serious problem, as it could have a significant impact on the lives of people from marginalized groups.

- There has been a rise in warnings against Artificial Intelligence (AI) by technology leaders, researchers and experts, including Geoffrey Hinton (artificial intelligence pioneer), Elon Musk (co-founder and CEO of Tesla), Emad Mostaque (British AI expert), Cathy O’Neil (American mathematician, data scientist, and author), Stuart J. Russell (British computer scientist), and many others.

- Recently, the World Health Organization called for caution in using AI for public health care.

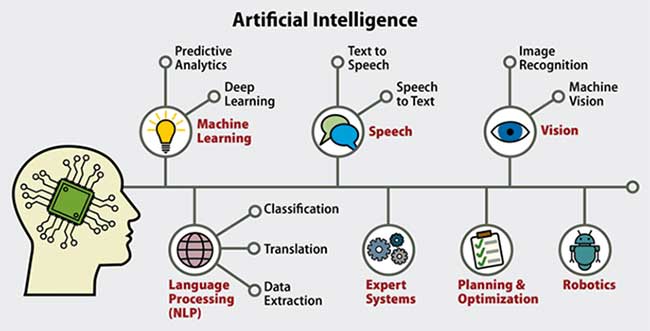

What is Artificial Intelligence?

Artificial intelligence is the simulation of human intelligence processes by machines, especially computer systems. Specific applications of AI include expert systems, natural language processing, speech recognition and machine vision.

How Can AI Bias Occur?

- AI algorithms learn from the past and project it into the future. This means that they can perpetuate existing biases and discrimination if the data they are trained on is biased. For example, an algorithm could sift through volumes of resumes, career trajectories, and performance records of previous employees in an organisation and wrongly conclude that men are, in general, more productive than women.

- A recent report by the United Nations Development Programme highlighted that ‘algorithmic bias has the greatest impact on financial services, healthcare, retail, and gig worker industries’. The report also flagged that AI-generated credit scoring tended to assign lower scores to women than to men, even when their financial backgrounds were similar. Research on AI applications in health-care diagnostics has identified significant biases against people of colour, since most of the data used to train the models came from the United States and China and did not represent all communities even within these countries.

- While these algorithms are designed to improve through feedback loops and programmatic corrections, they lack a moral compass; and unlike humans, they do not question stereotypes, norms, culture or traditions. Machines do not have a sense of fairness or empathy that a society, especially with minorities and disempowered communities, leans on. Furthermore, machines generalise learnt patterns to the population without knowing if the data used to train them are diverse and complete, with adequate representation from all communities and groups affected by their application.

- Against the backdrop of India's States, with their many languages, colours, cultures, and traditions, this threat can propagate more inequity and further exclude marginalised groups and minorities, which can translate into high costs in terms of livelihoods, opportunities, well-being, and life.
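The mechanism described above can be illustrated with a minimal sketch. It is not a real hiring system: the records are synthetic, the skew against women is planted deliberately, and the names `HISTORY`, `learn_promotion_rates` and `predict_score` are hypothetical. It only shows how a naive model that learns from past decisions hands the same bias back as a prediction.

```python
from collections import defaultdict

# Synthetic, made-up records: (gender, years_experience, was_promoted).
# Everyone has the same experience; only the past decisions differ.
HISTORY = [
    ("M", 5, True), ("M", 5, True), ("M", 5, True), ("M", 5, False),
    ("F", 5, True), ("F", 5, False), ("F", 5, False), ("F", 5, False),
]

def learn_promotion_rates(records):
    """Learn the historical promotion rate per gender (a naive 'model')."""
    promoted = defaultdict(int)
    total = defaultdict(int)
    for gender, _experience, was_promoted in records:
        total[gender] += 1
        promoted[gender] += int(was_promoted)
    return {gender: promoted[gender] / total[gender] for gender in total}

def predict_score(rates, gender):
    """Score a new candidate using only what the past data showed."""
    return rates[gender]

rates = learn_promotion_rates(HISTORY)
print(rates)
```

Although every person in the sample has identical experience, the learnt score is 0.75 for men and 0.25 for women, because the model has no way of questioning the decisions it was trained on.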

Other Risks Associated with AI

- Superintelligence: The intelligence of a sufficiently advanced AI could increase exponentially in an "intelligence explosion" and dramatically surpass that of humans.

- Technological unemployment: AI could lead to job displacement, as machines become capable of performing tasks that are currently done by humans. This could lead to increased unemployment and social unrest.

- Bad actors and weaponized AI: Terrorists, criminals and rogue states may use forms of weaponized AI such as advanced digital warfare and lethal autonomous weapons. By 2015, over fifty countries were reported to be researching battlefield robots.

- Privacy violations: AI systems could be used to collect and analyze large amounts of data about people, which could be used to violate their privacy.

How to Address AI Bias?

- We need clean, organised, digitised, and well-governed public data to build algorithms and models that benefit our people. Both industry and governments must exercise caution and invest sufficiently in AI research, development and scrutiny before embracing this innovation.

- While the challenge of available data volumes can be addressed due to the scale at which our administration and services operate, it is imperative that we prioritise the development of AI in a responsible and informed manner. An example is NITI Aayog’s initiative for Responsible AI.

- It is important to ensure that the data used to train AI algorithms is representative of the population as a whole. This means including data from people from all walks of life, including those from marginalized groups.
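One simple way to check representativeness is to compare each group's share of the training data with its share of the population. The sketch below assumes hypothetical group labels, made-up population shares and an arbitrary 5-percentage-point tolerance; the function name `representation_gaps` is illustrative and does not come from any standard library.

```python
from collections import Counter

def representation_gaps(samples, population_shares):
    """Return (share in dataset - share in population) for each group."""
    counts = Counter(samples)
    n = len(samples)
    return {
        group: counts.get(group, 0) / n - share
        for group, share in population_shares.items()
    }

# Made-up numbers for illustration only.
population_shares = {"group_a": 0.5, "group_b": 0.5}
training_samples = ["group_a"] * 80 + ["group_b"] * 20

gaps = representation_gaps(training_samples, population_shares)

# Flag groups whose dataset share falls well below their population share.
TOLERANCE = 0.05  # assumed threshold, not an established standard
underrepresented = [g for g, gap in gaps.items() if gap < -TOLERANCE]
print(underrepresented)
```

Here `group_b` makes up half the population but only a fifth of the training data, so it is flagged. A check like this is only a first step: balanced counts do not by themselves guarantee that the labels attached to each group are free of bias.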

Conclusion

- The private sector and practitioners should work collaboratively with governments on this journey. It is crucial to emphasise that the goal must be to build intelligent machines that are inclusive and reflect the country’s diversity and heterogeneity. Our adoption of this innovation should not hinder our progress towards equality and equity for all; it should support our reforms and endeavours for positive change.

- The use of AI in India has the potential to be a powerful tool for social good. However, it is important to be aware of the potential for AI bias and to take steps to mitigate this risk. By taking these steps, we can ensure that AI is used to benefit all members of society, regardless of their background.

Probable Questions for Mains Exam-

- Question 1: What is Artificial Intelligence? What kind of AI should be deployed in India in the light of NITI Aayog’s initiative for “Responsible AI”? (10 Marks, 150 Words)

- Question 2: “AI has the potential to be a powerful tool for social good.” Justify this statement in the Indian context. What is the potential for AI bias? What steps should be taken to mitigate this risk? (15 Marks, 250 Words)

Source : The Hindu