Context-

Since its inception, artificial intelligence (AI) has transformed many parts of modern life, from improving medical diagnosis to streamlining supply networks. Alongside these benefits, however, AI has also given rise to technologies that pose significant risks. One such technology is the deepfake: hyper-realistic but entirely fabricated audio and visual content. In recent years, deepfakes have become a pressing concern for governments, security agencies, and civil societies worldwide.

Understanding Deepfakes

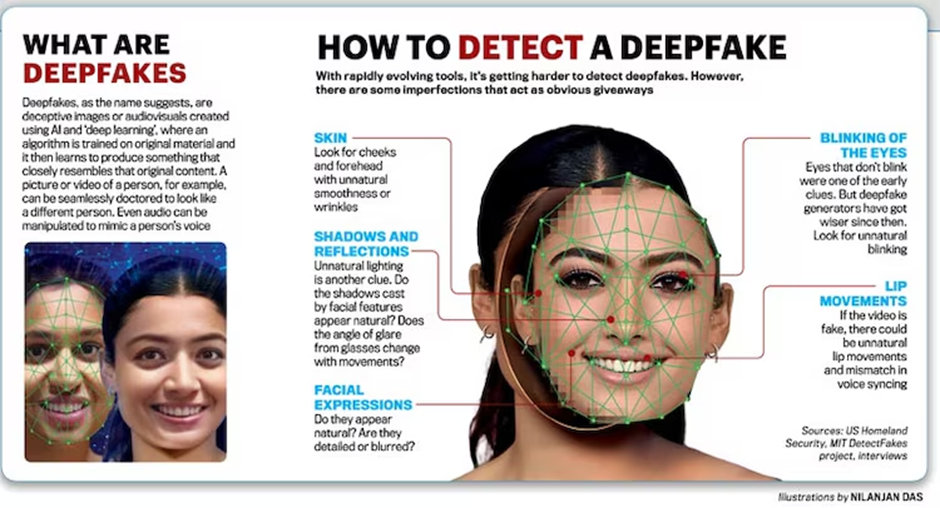

- The Technology Behind Deepfakes : Deepfakes are synthetic media generated with machine learning (ML), most commonly through Generative Adversarial Networks (GANs). In a GAN, two neural networks, the generator and the discriminator, are trained against each other. The generator creates synthetic data such as photos, audio files, or video clips, while the discriminator tries to tell that synthetic data apart from real samples. Training alternates between the two until the generator's output improves to the point where the discriminator can no longer reliably distinguish real from fake data.

- Early Developments and Popularity : In 2017, FaceApp Technology Limited launched “FaceApp,” a photo and video editing app for iOS and Android devices that uses AI-powered neural networks to create realistic alterations of human faces in still photos. In August 2019, the Chinese face-swap application Zao gained popularity for allowing users to replace celebrities' faces with their own in video clips. Despite its popularity, Zao raised digital privacy concerns, notably over the broad rights its user agreement claimed over uploaded images.

- Advancements and Future Prospects : In 2019, the Japanese start-up DataGrid developed an AI system that generates full-body images of non-existent people. DataGrid's algorithm can produce a practically unlimited number of realistic-looking persons, each with a new, non-existent face and shown in various poses and looks. Deepfakes can convincingly alter individuals' speech, facial expressions, and other characteristics, making it appear that they are saying or doing things they never did. Deepfakes were initially seen as a novelty, but their potential for misuse has become increasingly apparent.
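The generator-versus-discriminator contest described under "The Technology Behind Deepfakes" can be illustrated with a deliberately tiny sketch. It is not how real deepfake systems are built: instead of images and deep networks, it assumes one-dimensional "data" drawn from a bell curve, a generator that can only shift random noise by a learned offset, and a discriminator that is a single logistic unit. The function name `train_toy_gan` and all parameter values are hypothetical choices made for this illustration.

```python
import math
import random


def sigmoid(u):
    """Numerically stable logistic function."""
    if u >= 0:
        return 1.0 / (1.0 + math.exp(-u))
    e = math.exp(u)
    return e / (1.0 + e)


def train_toy_gan(real_mean=3.0, steps=4000, batch=64, lr=0.05, seed=0):
    """Toy 1-D GAN (illustrative assumption, not a production design).

    Generator:     G(z) = z + shift        (learns only an offset)
    Discriminator: D(x) = sigmoid(w*x + c) (probability that x is real)
    """
    rng = random.Random(seed)
    w, c = 0.0, 0.0   # discriminator parameters
    shift = 0.0       # generator parameter; starts far from real_mean

    for _ in range(steps):
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        fake = [rng.gauss(0.0, 1.0) + shift for _ in range(batch)]

        # Discriminator step: minimise -log D(real) - log(1 - D(fake)).
        grad_w = grad_c = 0.0
        for x in real:
            err = sigmoid(w * x + c) - 1.0   # pushes D(real) towards 1
            grad_w += err * x
            grad_c += err
        for x in fake:
            err = sigmoid(w * x + c)         # pushes D(fake) towards 0
            grad_w += err * x
            grad_c += err
        w -= lr * grad_w / batch
        c -= lr * grad_c / batch

        # Generator step: minimise -log D(fake), i.e. try to fool D.
        grad_shift = sum((sigmoid(w * x + c) - 1.0) * w for x in fake) / batch
        shift -= lr * grad_shift

    return shift, w, c


if __name__ == "__main__":
    shift, w, c = train_toy_gan()
    print("learned generator offset:", round(shift, 2))
    print("D's verdict on a typical sample:", round(sigmoid(w * 3.0 + c), 2))
```

In this sketch the generator's offset should drift towards the mean of the real data, and the discriminator's output on a typical sample should settle near 0.5, which is the toy equivalent of the discriminator no longer being able to tell real from fake.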

Deepfake as a Threat

- Threat to Political Stability : Political stability is significantly threatened by deepfakes in Bharat's polarized and competitive political landscape. Deepfakes can undermine political opponents, spread misinformation, and manipulate public opinion. Fabricated videos of political leaders making inflammatory statements could incite violence, disrupt elections, or diminish public trust in democratic institutions. The rapid dissemination of false information through social media platforms exacerbates this threat.

- Threat to Social Cohesion and Communal Harmony : Bharat's diversity in religions, languages, and cultures makes maintaining social cohesion and communal harmony a challenge. Deepfakes can stoke communal tensions by creating fake videos showing one community attacking another. Such content can go viral quickly, leading to violence and riots, as seen with misinformation regarding the Citizenship Amendment Act (CAA) in February 2020. The psychological impact of false evidence can make it difficult to quell unrest.

- Threat to National Security and Defence : In March 2022, a deepfake video of Ukrainian President Volodymyr Zelensky falsely telling Ukrainians that their troops had surrendered highlighted the use of deepfake technology during military conflicts. Deepfakes can demoralize troops, spread false orders, or create confusion within the ranks. A deepfake video showing a senior military official surrendering or issuing a false command could devastate morale and operational effectiveness. Deepfakes could also manipulate diplomatic communications and create false narratives, influencing international relations.

- Impact on the Economy : The economic implications of deepfakes are significant. In a digital economy, trust is paramount. Deepfakes can erode confidence in digital transactions, electronic communications, and financial markets. In a 2019 cyber scam reported by the insurer Euler Hermes Group SA, a fraudster used AI-generated deepfake audio to imitate the voice of the chief executive of a UK energy firm's German parent company, persuading the UK firm's CEO to wire €220,000 (approximately ₹1.7 Crore) to a Hungary-based supplier. Rüdiger Kirsch, a fraud expert at Euler Hermes, described the case publicly. It highlighted the use of deepfakes for financial fraud. Fraudsters can employ deepfakes to impersonate officials, authorize fraudulent transactions, or propagate misinformation affecting stock prices, leading to significant financial losses and eroding trust in the economic system.

Countermeasures and Policy Responses

Deepfakes are one of several recent developments in artificial intelligence, and fake news or misinformation generated by AI/machine learning algorithms has gained traction in recent years. In November 2018 and in 2019, three scientific papers proposed detecting and countering deepfakes by looking for face-warping artifacts and inconsistent head poses, analyzing an individual's facial expressions and movements while speaking. In the long run, however, these techniques may stop working, as deepfake developers are likely to strengthen their discriminative neural networks and, through them, the realism of their deepfakes.

- Technological Solutions : Investing in and improving AI-based detection systems that can identify deepfakes with high accuracy is essential. These systems should be integrated into social media platforms and other critical digital infrastructure.

- Legislation and Regulation : Enacting robust laws that criminalize creating and disseminating malicious deepfakes is crucial. This should be coupled with regulations that mandate digital platforms to take down harmful deepfake content swiftly.

- Public Awareness : Educating the public about the existence and dangers of deepfakes can reduce the likelihood of such content being believed and spread. Media literacy programs should be integrated into the education system and public information campaigns. Governments and organizations must develop a ‘response’ campaign against the spread of misinformation, treating it as a security incident rather than a nuisance.

- International Cooperation : Deepfakes are a global phenomenon; therefore, international cooperation is needed to combat them effectively. Bharat should collaborate with other nations and international organizations to share knowledge, best practices, and technological solutions.

- Psychological Resilience : Developing the psychological resilience of the population is equally important. Citizens should be trained to critically assess the content they encounter and avoid sharing unverified information.

Conclusion

Deepfakes represent a significant and evolving threat to Bharat’s national security. They have the potential to undermine political stability, disrupt social harmony, and compromise national defence. Mitigating these threats requires a comprehensive approach involving technological, legal, and societal measures. As Bharat continues to advance in the digital era, it must remain vigilant and proactive in addressing the challenges posed by deepfakes to ensure a secure and harmonious future.


Probable Questions for UPSC Mains Exam-


Source- VIF